Phil Lewis

Vector Search Basics

With the surge of new start-ups in 2021 touting Vector Search systems, you might be forgiven for thinking that Vector Search is a new type of search that solves a multitude of thorny problems and is the new holy grail of search.

You would be partially correct too, but the reality is that the ideas behind Vector Search date way back to the 1950s and the work of English linguist John Rupert Firth, and were then taken much further by Gerard Salton of Cornell University.

The way it works is that for each document you want to index, instead of creating an inverted index of terms (incidentally also invented around the same time), you create a feature vector that encodes the contents of the document into an n-dimensional space.

As a comparison, take a document that contains the following:

Keyword indexes will dutifully index the important terms from this ‘document’ and therefore find it when searching for cake or hair. But humans know that this document does not really talk about cake or hair.

A Vector Search system would instead create a vector for this document. It does not need to do it for every word and can be as basic or as complex as you need.

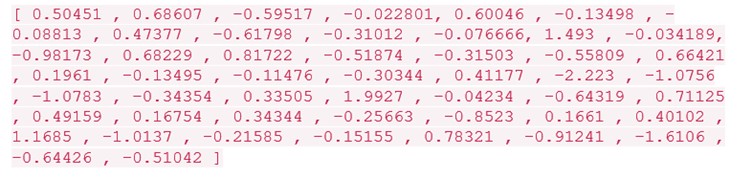

One vector system might create a vector like this for it.

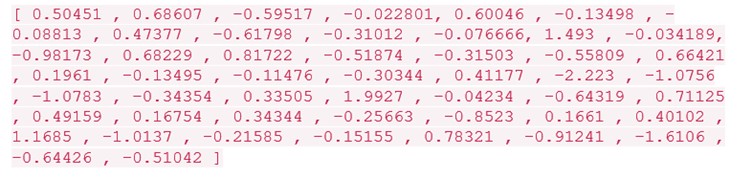

Another, this.

* Notice how the example above knows that “pulling his hair out” is an expression of frustration.

Do not take this for granted; we will come back to it in a moment.

Vectors describing a sentence or paragraph usually consist of 300 or more floating-point numbers. The following example is a real-life GloVe vector that encodes the term ‘king’ into a vector space that knows something about what kings do, what they stand for and how the term is used in English.

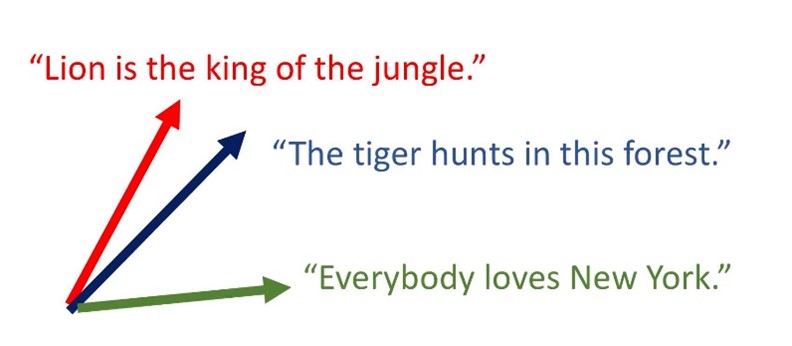

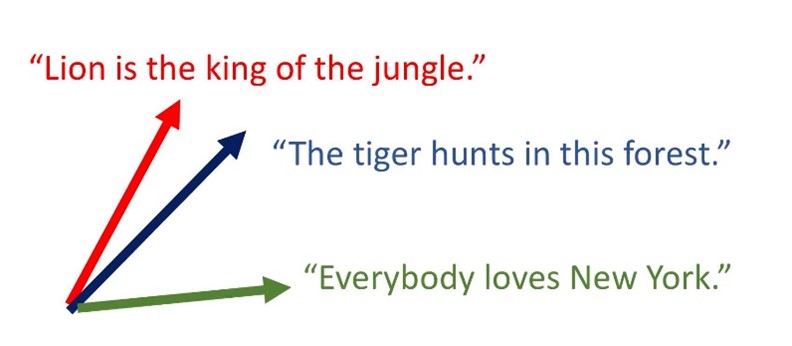

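When searching using Vector Search, we encode the query as a vector in the same way, then perform a cosine similarity calculation between the query vector and the indexed vectors to find phrases with similar features.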
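To make the cosine similarity step concrete, here is a minimal sketch in plain Python. The three-dimensional vectors, the document names and the `search` helper are all made up for illustration; real vectors come from an encoder and have hundreds of dimensions, and production systems typically use approximate nearest-neighbour indexes rather than a full scan like this one.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # Convention chosen for this sketch: a zero vector matches nothing.
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vec, doc_vecs, top_k=3):
    """Score every document against the query and return the best top_k."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical, hand-written vectors (not produced by any real model).
docs = {
    "frustrated_customer": [0.9, 0.1, 0.0],
    "baking_recipe": [0.0, 0.2, 0.9],
    "hair_salon": [0.1, 0.9, 0.1],
}
query = [0.8, 0.2, 0.0]

results = search(query, docs, top_k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

Because cosine similarity measures the angle between vectors rather than their length, a long document and a short query can still score highly if they point in the same direction.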

Remember, Vector Search has been around for nearly 70 years!

So why now?

The reason this really works now is that modern Natural Language Processing (NLP) systems took a big jump in understanding in 2018, when Google open-sourced BERT, a way of encoding text as vectors that has a much deeper understanding of terms and how they relate to each other. For example, it knows that ‘pulling his hair out’ is not really about ‘hair’ and that ‘it’s a piece of cake’ is not about cake.

These new language models produce a very specific set of vectors, much more ‘descriptive’ than we have ever had before because of this research work that Google (and others) have done in recent years.

So, here’s the rub with Vector Search vs Keyword Search:

Vector Search does not index the words, just the encoded features.

So, it is not possible to accurately perform a search for only a specific term within the corpus. Forensic searches like “CHARGE” OR “CHARGER” NOT “BATTLE” are not possible with Vector searches.
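For contrast, here is a rough sketch of why that kind of forensic query is natural for a keyword index. It builds a toy inverted index over four invented documents and evaluates “CHARGE” OR “CHARGER” NOT “BATTLE” with plain set operations. The documents, the `tokenize` rule and the helper names are assumptions for illustration only; real search engines add stemming, positions, scoring and much more.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lower-case the text and split it into simple alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


# Invented example documents.
docs = {
    1: "Leave the phone on charge overnight",
    2: "The charger cable is frayed",
    3: "The cavalry led the charge in the battle",
    4: "It's a piece of cake",
}
index = build_inverted_index(docs)


def postings(term):
    """Documents containing exactly this term (empty set if none)."""
    return index.get(term.lower(), set())


# "CHARGE" OR "CHARGER" NOT "BATTLE": union, then set difference.
matches = (postings("CHARGE") | postings("CHARGER")) - postings("BATTLE")
print(sorted(matches))
```

The index stores the words themselves, so exact inclusion and exclusion are simple set operations. A vector index stores only encoded features, which is why it cannot guarantee the same thing.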

Vector searches bring something to the table that keyword searches alone cannot fulfil; however, they are not the answer to all search use cases. Just like Keyword Search systems, Vector Search systems have their own set of foibles and should not be considered a general replacement for keyword search. Only blended solutions, those that take keyword searches as seriously as they do vector searches, will prevail. Combined with the rapidly expanding world of NLP text encoders, we see lots of exciting use cases on the horizon. For now, at least, we must combine these technologies.
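One simple way to picture a blended solution is a weighted sum of a keyword score and a vector score for each document. The sketch below is only an illustration of that general idea, not a description of how we or any particular product do it. The `blend_scores` function, the `alpha` weight and all of the scores are hypothetical, and both score sets are assumed to be already normalised to the range 0 to 1.

```python
def blend_scores(keyword_scores, vector_scores, alpha=0.5):
    """Rank documents by alpha * keyword score + (1 - alpha) * vector score.

    A document missing from one result set gets a score of 0.0 on that side.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    doc_ids = set(keyword_scores) | set(vector_scores)
    blended = {
        doc_id: alpha * keyword_scores.get(doc_id, 0.0)
        + (1.0 - alpha) * vector_scores.get(doc_id, 0.0)
        for doc_id in doc_ids
    }
    return sorted(blended.items(), key=lambda pair: pair[1], reverse=True)


# Hypothetical, pre-normalised scores from the two systems.
keyword_scores = {"doc_a": 1.0, "doc_b": 0.2}
vector_scores = {"doc_b": 0.9, "doc_c": 0.7}

ranked = blend_scores(keyword_scores, vector_scores, alpha=0.5)
for doc_id, score in ranked:
    print(f"{doc_id}: {score:.2f}")
```

Setting `alpha` to 1.0 gives a pure keyword ranking and 0.0 a pure vector ranking, so the weight becomes a dial between the two behaviours.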

But oh, what a glorious combination! Look out for our second part on how we use vectors, with examples of why we think keyword search and vector search work so well together.

Hope you enjoyed my little rant on Vector Search vs Keyword Search. You may also like another recent blog I wrote, “Did MUM just kill BERT?” about the possible successor to Google BERT and how it will enhance question answering systems.

As always, if you have any questions or want to discuss anything, drop me a line at info@pureinsights.com.

Cheers,

Phil