Fabian Solano

Generative AI tools like OpenAI’s ChatGPT have emerged out of relative obscurity to become a public viral phenomenon. In this blog, we explore how the technologies behind them – Large Language Models like GPT-4 – are driving the AI Revolution. We’ll illustrate key points wherever possible with examples from the various models.

Machine learning and artificial intelligence concepts have been around for many years. One of the earliest breakthroughs in AI came in 1956, when a group of researchers organized a conference at Dartmouth College to discuss the possibility of creating intelligent machines. This conference is often considered the birth of AI as a field of study.

But it was not until ChatGPT’s release on November 30, 2022, that AI technology saw a real shift in media attention and public access. What made it so different? Previous advances in the field were mostly confined to research institutes, universities, and big tech companies. ChatGPT put the technology directly in the hands of non-technical users.

Since then, the tool has become a viral phenomenon, with mentions not just in tech circles, but also popular media. It’s part of the general public consciousness.

With an easy-to-use interface driven by natural language, people with no technical background found real use cases for the tool and used it to ease day-to-day tasks in many jobs.

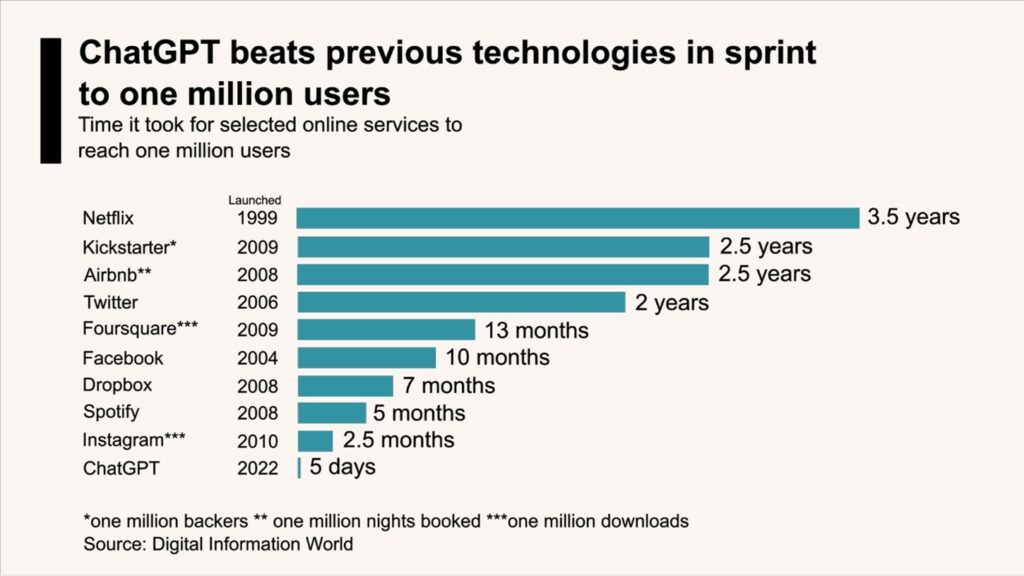

As seen in this graph derived from Digital Information World reports, it took only 5 days for ChatGPT to reach a million users, and it reached over 100 million users by January 2023. Making new technology useful for common applications drives paradigm changes. In the Internet’s early years, few people believed that it was going to last, when in fact, it was about to change everything – products, services, and experiences as we know them today.

Search engines like Google, with their simple interfaces, helped drive this change. And now, Natural Language Processing and user interaction with artificial intelligence models are poised to be the next big step.

What makes GPT-4 better than previous versions?

We covered what GPT-3 is in a previous blog. GPT-4 is an improved version over its predecessors, with several key advancements that contribute to its enhanced performance.

It has a larger model size with a significantly larger number of parameters than GPT-3, which means it captures and stores more knowledge and better understands complex language patterns.

It was also trained on enormous amounts of higher-quality data and enhanced with fine-tuning and transfer learning.

However, citing the competitive landscape and the safety implications of large-scale models like GPT-4, OpenAI’s release paper provides no further details about the model’s architecture (including model size), hardware, training compute cost, dataset construction, training method, or related facts.

It is interesting how a company that started as a non-profit research organization now worries about competitors and profits from its results. Perhaps the high costs of training and running this kind of technology have something to do with it, but it is still a notable change from OpenAI’s initial stated objective: “To ensure that artificial general intelligence—AI systems that are generally smarter than humans—benefits all of humanity.”

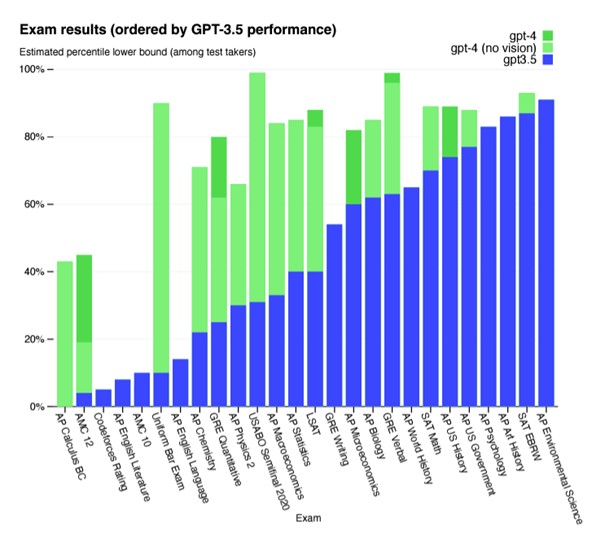

In the paper, there is an interesting comparison of exam results showing where GPT-4 exceeds previous models. But it does not only perform better on fairly structured exam questions. Newer models are also more nuanced.

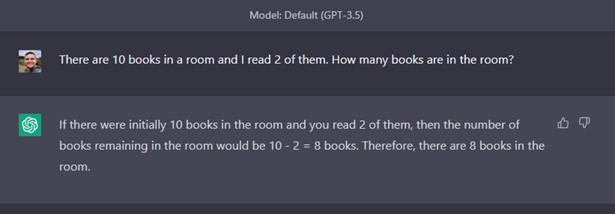

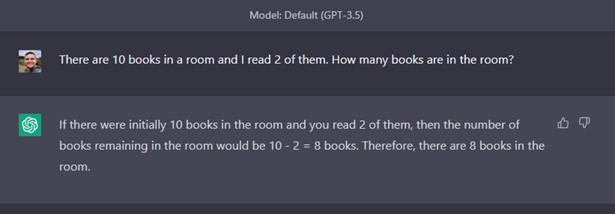

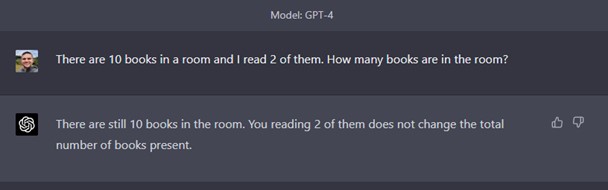

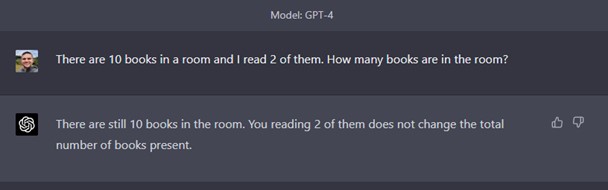

Take for example the following query:

“There are 10 books in a room and I read 2 of them. How many books are in the room?”

The GPT-3.5 model provides a simplistic, direct answer. But more subtle reasoning would imply that, when someone reads a book in a room, the book still exists and does not magically disappear. GPT-4 takes reading comprehension and analysis to a new level by taking this subtlety into account.

More context. More capabilities.

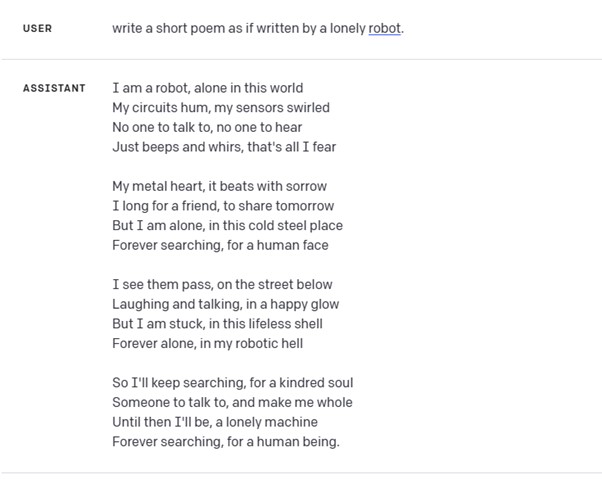

One limitation users quickly ran into with ChatGPT, even with the GPT-3.5-turbo model, was input size, which was limited to 4k tokens, or about 3,000 words. With GPT-4, OpenAI increased the limit to as much as 32k context tokens (about 24,000 words). Even with the 4k token limit, the OpenAI models generated interesting outputs very quickly, especially when the user request provided very specific guidelines or parameters to work with. Here is an example generated in about 3 seconds using the GPT-3.5 Turbo model.

With an increased limit to 32k input tokens, users are able to provide even more information on a task request to the AI model. They can provide more specific details about objectives, restrictions, and guidelines to enhance the output.
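To make the token figures above concrete, here is a rough sketch of how you might estimate whether a document fits in a given context window. It assumes the rule of thumb implied by the numbers in this post (4k tokens is about 3,000 words, so roughly 0.75 words per token). The function names are hypothetical, and a real tokenizer will give different counts depending on the text.

```python
import math

# Assumption: ~0.75 English words per token, derived from "4k tokens ~ 3,000 words".
# Real tokenizers vary with language, punctuation, and vocabulary.
WORDS_PER_TOKEN = 0.75


def max_words(context_tokens: int) -> int:
    """Approximate number of words that fit in a context window."""
    return int(context_tokens * WORDS_PER_TOKEN)


def estimate_tokens(text: str) -> int:
    """Roughly estimate token usage by counting whitespace-separated words."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, context_tokens: int, reserved_for_answer: int = 0) -> bool:
    """Check whether the text, plus room reserved for the reply, fits the window."""
    return estimate_tokens(text) + reserved_for_answer <= context_tokens


if __name__ == "__main__":
    report = "word " * 10_000  # stand-in for a 10,000-word document
    print(max_words(4_000), max_words(32_000))
    print(fits_in_context(report, 4_000), fits_in_context(report, 32_000))
```

Under this estimate, a 10,000-word document is too large for a 4k window but fits comfortably in a 32k one, which is the practical difference the larger limit makes.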

The introduction of an API to the GPT-4 model also empowered developers to begin developing actual applications. One demo that popped up quickly was for a tool to autogenerate lawsuits. Another demo showed how the tool could generate source code to replicate the game of Pong in under 60 seconds. One ambitious thread on Twitter documents an experiment where the user asks GPT-4 to make as much money as possible on a budget of $100.

Multimodal support

Apart from using Large Language Models (LLMs) for generative text, other AI applications have emerged such as Stable Diffusion or Midjourney focused on synthetic AI-generated images. Given a prompt with certain restrictions and guidance, the AI can create new pictures. There are even sites such as photoai.com where it is possible to train the model with custom user pictures to generate unseen photos of the user that have never existed before.

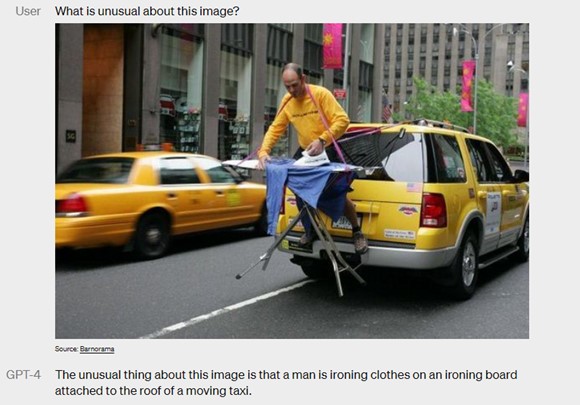

GPT-4 introduced multimodal support, which means it can accept a query composed of text and images as input, compared to text-only in previous models. In the example below, the user submits a photo and asks the AI model to comment on it. It is quite remarkable that the model not only understands the query, but is also able to “understand” or interpret the image and come up with a response indistinguishable from a human’s.

With this new feature it would be possible to create complex code-generating applications with amazing speed. This might not replace large scale development projects, but it could be used for proof-of-concept projects or rapid prototypes. Imagine, for example, a program that could create a website from a handmade sketch.

Graph Data Analysis with GPT-4

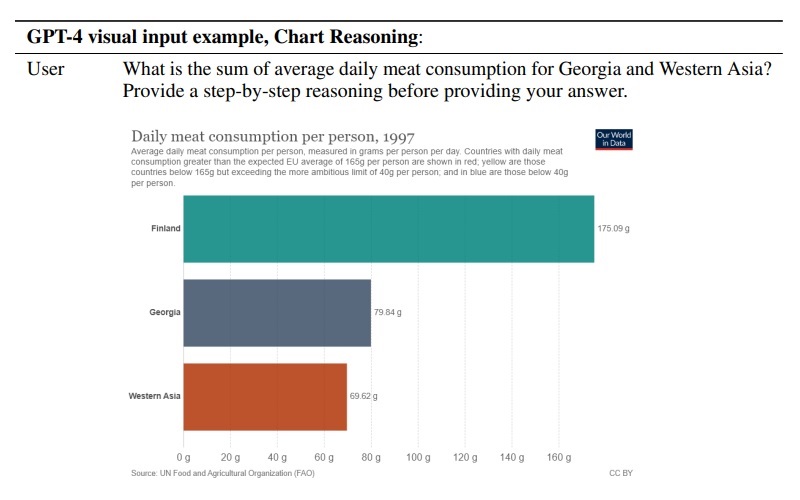

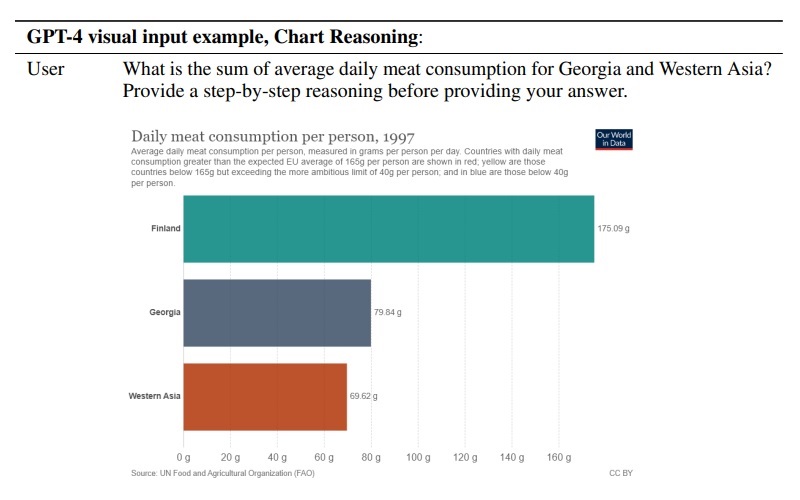

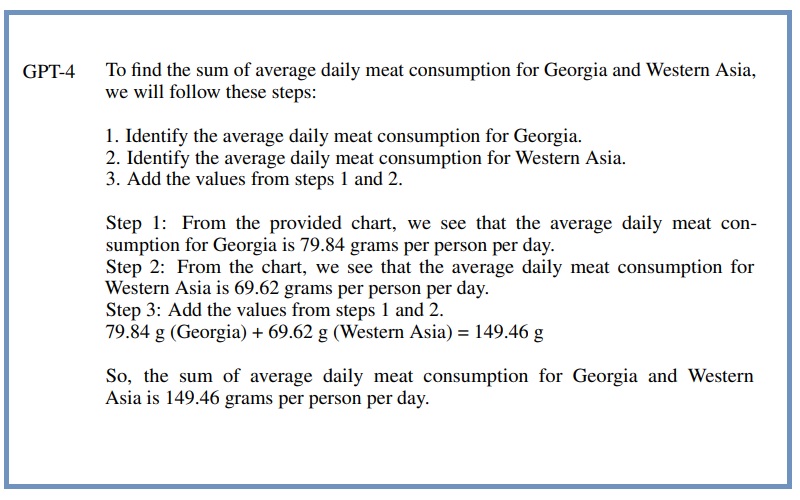

Even though image inputs are still a research preview and not publicly available, the concepts proposed by OpenAI go even further, for example analyzing a plot and providing a step-by-step analysis with a statistically correct answer about the data presented. In general, the ability to provide graphical inputs can greatly expand the utility of the model. It is much easier to supply a picture of a specific situation or data set than to describe its characteristics in writing, where it is always possible to miss something. The example from the GPT-4 Technical Report shown here illustrates this ability to do basic analysis of a graph purely from an image.

Can I test it right away? What new features are available?

Currently, GPT-4 is only available through the OpenAI API, once access has been granted, or through a paid ChatGPT Plus subscription, which allows testing the model in that interface. However, usage through the site is capped at 25 messages every 3 hours, and that cap may fluctuate with demand for the service. So initially, experimentation may be limited to very simple use cases.

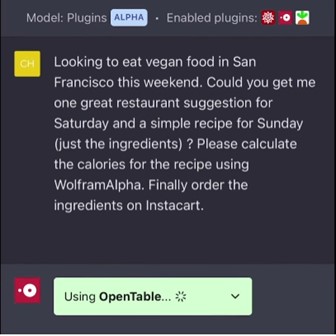

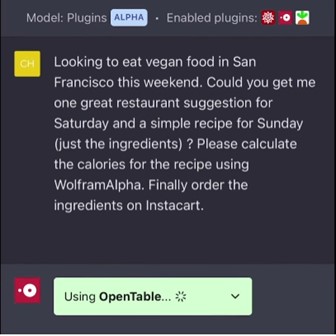

In other recent major developments, OpenAI tools are being integrated into real applications, including Microsoft’s new Bing, which launched very quickly. OpenAI’s latest update will also allow plugins to be added to the ChatGPT experience. Plugins are extensions designed specifically for language models with safety as a core principle. They help ChatGPT access up-to-date information, run computations, or integrate with third-party services.

Early plugin examples include one for OpenTable that lets users make a restaurant reservation. Another from Instacart lets you order groceries for a recipe, and the Wolfram Alpha plugin can calculate its calories (a complex computation not yet available in most general LLMs).

More exciting for me as a developer are plugins that might help me customize or expand the capabilities or domain knowledge of GPT-4. One such plugin interacts with a vector database so that you can tune ChatGPT to use your own content. The plugin first fetches the relevant snippet(s) from the database (these can be any text) and then uses them as context for an answer. We could easily enhance our Pureinsights Discovery Platform™ to do the same.
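The fetch-then-answer flow described above can be sketched in a few lines. This is only an illustration, not how the actual plugin is implemented: the "embedding" here is a toy bag-of-words vector standing in for a learned embedding model, the in-memory list stands in for a vector database, and names like `retrieve`, `build_prompt`, and `SNIPPETS` are hypothetical. The resulting prompt would then be sent to the language model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, snippets: list[str], k: int = 2) -> list[str]:
    """Return the k snippets most similar to the question (stand-in for a vector DB query)."""
    q = embed(question)
    ranked = sorted(snippets, key=lambda s: cosine(q, embed(s)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, snippets: list[str], k: int = 2) -> str:
    """Fetch the best snippets first, then place them in the prompt as context."""
    context = "\n".join(f"- {s}" for s in retrieve(question, snippets, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical content store; any text could be indexed this way.
SNIPPETS = [
    "Our support desk is open Monday to Friday from 9am to 5pm.",
    "The premium plan includes priority support and unlimited projects.",
    "Invoices are sent by email on the first day of each month.",
]

if __name__ == "__main__":
    print(build_prompt("When is the support desk open?", SNIPPETS, k=1))
```

Because the model only sees the snippets that were retrieved, the quality of the answer depends heavily on the quality of the retrieval step, which is where a search platform can add value.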

Caution: Is AI progressing too fast?

A recent post from Fortune Magazine reports on an open letter, with more than a thousand signatures so far, including Elon Musk (CEO of SpaceX, Tesla & Twitter), Steve Wozniak (Co-founder, Apple), Stuart Russell (Berkeley, Professor of Computer Science) and many others, calling for a pause of at least 6 months on the development of models more powerful than GPT-4. The letter argues that consensus is needed to evaluate the possible risks of this kind of technology and its positive and negative effects on the world.

This kind of concern conjures up images from sci-fi movies such as “Terminator” where AI systems take over. We are still far away from the creation of General AI capable of that, but even existing new models can directly affect thousands of jobs, companies and governments. So perhaps it’s not a bad idea to take a pause, the same way we were careful with nuclear power, and the same way we are taking a critical look now at the impact of social media platforms on society.

Pragmatic Advice: Leveraging GPT-4 for Search

The AI revolution is here, powered by models like GPT-4. What comes next? How to leverage GPT-4 for search?

Data is the currency of the Internet, and the value of data is directly related to whether or not it can be easily searched for and found. Tools like GPT-4 have the potential to redefine the search experience we expect.

GPT-4 can be used as an extension of traditional search, where natural language processing of the user’s request produces better search queries against custom data. There are even applications that let you interact with your documents to search for specific information or to help you better understand what you are reading.

For now, it will not completely replace traditional search because – as OpenAI freely admits – it can hallucinate, literally making up facts or making errors in reasoning. Not something you want in a search application where you are trying to discern and discover knowledge. But these AI models can definitely help with question answering, data analysis, knowledge graph generation and keyword-driven search. And they could accelerate the evolution of the traditional search bar into a more conversational interface.

We are just seeing the beginning of a revolution in how AI will drive new content-rich applications. I hope this blog helped you understand the key differences and enhancements that GPT-4 has to offer.

As always, please CONTACT US if you have any comments or questions, or to request a free consultation to discuss your ongoing search and AI projects.

– Fabian

RELATED RESOURCES

Other Blogs in this Series

- What is ChatGPT? AI and Search Perspectives (Part 1 of 3)

- What is GPT-3? Search and AI Perspectives (Part 2 of 3)

- What are Large Language Models? Search and AI Perspectives (Part 3 of 3)