Martin Bayton

BERT: A pivotal development in search

BERT (Bidirectional Encoder Representations from Transformers) is a Natural Language Processing (NLP) model developed by Google. It revolutionizes language understanding by capturing bidirectional context for each word in a sentence, enabling a more nuanced representation of words based on their surrounding context. BERT’s contextual embeddings have been widely applied in various NLP tasks, enhancing the accuracy and sophistication of language-related applications.

Word embeddings

Word embeddings are numerical representations of words that capture their meaning and relationships to other words. By representing words as vectors in a high-dimensional space, word embeddings allow for the calculation of semantic similarity between words, phrases, and documents. This facilitates search algorithms to identify documents that are conceptually related to a query, even if they do not share the exact same keywords.

Traditional word embeddings

Traditional word embedding methods, such as Word2Vec and GloVe, represent each word with a fixed-length vector, regardless of the context in which the word appears. This means that the contextual meaning of the word is not considered when generating the embedding. As a result, these methods can struggle to capture the nuances of language and may produce inaccurate embeddings for words that have multiple meanings or that are used in different ways in different contexts.

Contextual word embeddings

BERT, on the other hand, generates contextualized word embeddings, which means that the embedding for a word will change depending on the context in which it is used. This is because BERT considers the entire sentence or paragraph when generating the embedding for a word, which allows it to capture the meaning of the word in that specific context.

For example, the word “bank” can have different meanings depending on the context. In the sentence “I went to the bank to deposit some money,” the word “bank” refers to a financial institution. However, in the sentence “The river flooded its banks,” the word “bank” refers to the edge of a river. BERT is able to capture these different meanings by generating different embeddings for the word “bank” in each sentence.

Contextualized word embeddings have a number of advantages over traditional word embeddings. They are more accurate and capture the nuances of language more effectively. They are also more versatile and can be used for a wider range of NLP tasks.

BERT’s effectiveness is attributed to its extensive pretraining on large datasets. By exposing the model to vast amounts of diverse text from the internet, BERT learned to understand the intricacies of language, including the contextual nuances that play a crucial role in comprehending user queries.

Vector Search

Vector search can leverage BERT’s contextual word embeddings to bridge the gap between natural language queries and content. By representing both queries and documents as vectors, search algorithms can calculate their similarity enabling the retrieval of documents that align closely with the user’s search intent. This approach goes beyond exact keyword matching, allowing for the retrieval of semantically relevant information.

Development Path

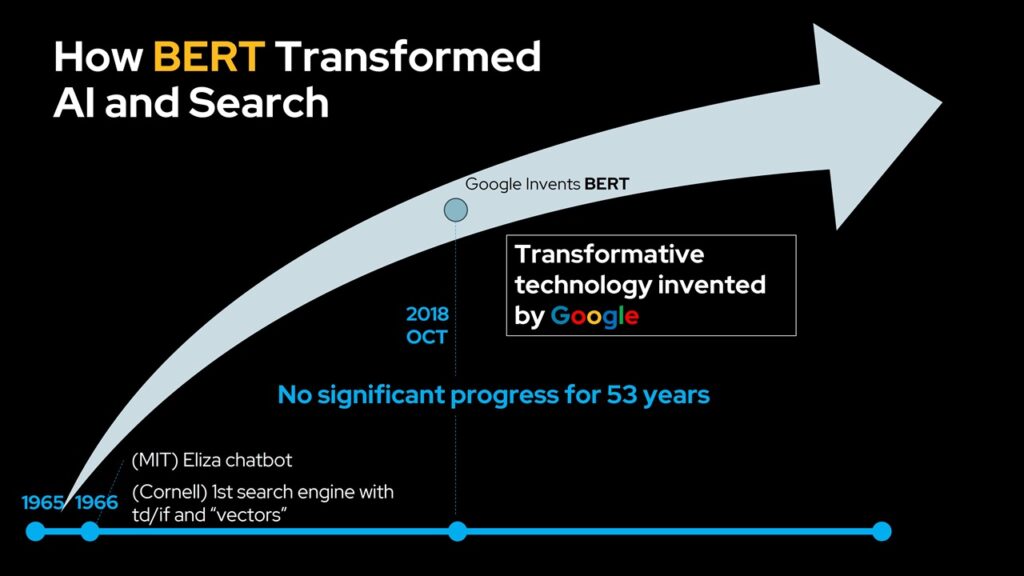

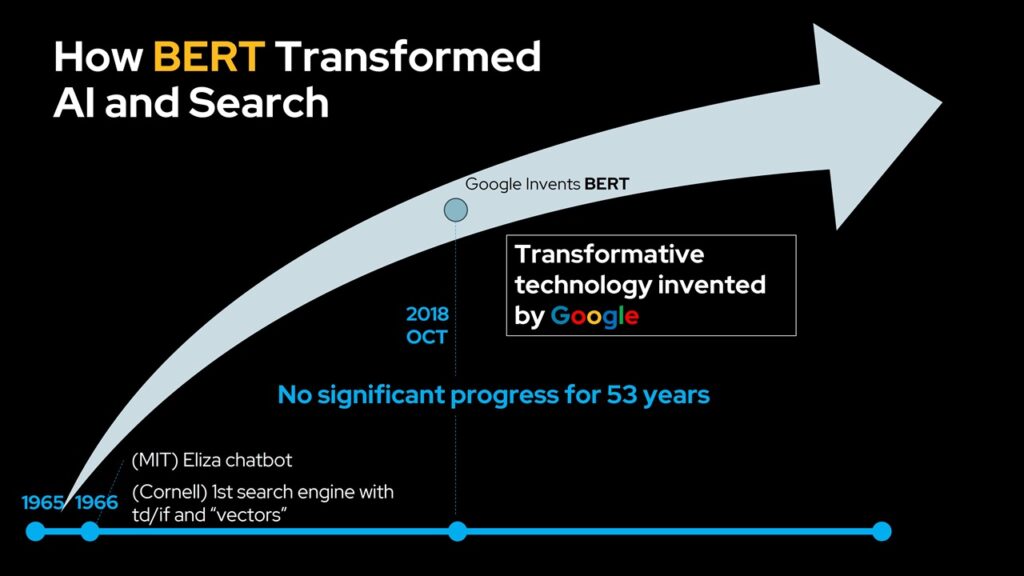

BERT officially entered the scene in October 2018. The BERT model was introduced in the paper titled ‘BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding’ written by Google researchers, including Jacob Devlin and Ming-Wei Chang. It was open-sourced in November 2018. This release made the model freely available to the public, enabling researchers and developers to build upon its capabilities and explore new applications. The open-source release of BERT marked a significant turning point in the field of NLP, fostering collaboration and innovation across the community. Finally, BERT was integrated into Google Search in late 2019. This integration marked a key moment in the evolution of search engines, as BERT significantly improved the understanding of user intent and delivered more accurate and relevant search results.

BERT’s Legacy

BERT represents a pivotal moment in the journey towards search that understands and responds to human language in all its richness and complexity. Its revolutionary bidirectional approach to language has profoundly impacted Natural Language Processing and search technology. By considering the full context of words in a sentence, BERT delivers deeper semantic understanding.

As NLP continues to evolve, BERT’s legacy will undoubtedly endure. Its influence has shaped the direction of research and development in the field, paving the way for even more sophisticated and human-centric Artificial Intelligence (AI) interactions. At the forefront, models like GPT-3 and GTP-4 from OpenAI are now pioneering amazingly human-like text generation powered by transformer architectures.

If you have any further questions of comments, please feel free to CONTACT US.

Additional Resources

- Understanding searches better than ever before

- Open Sourcing BERT: State-of-the-Art Pre-training for Natural Language Processing

- Transformer: A Novel Neural Network Architecture for Language Understanding

- Paper Walkthrough: Bidirectional Encoder Representations from Transformers (BERT)