Defining RAG and implementing it – the right way.

AI has ripped through the search landscape, rewriting the rules for businesses like yours. But third-party AI tools can’t cut it on their own, because they don’t understand your specific content. Enter Retrieval Augmented Generation (RAG): a game-changer that personalizes search, tailoring results to your users based on your unique data. It’s not just about keywords; it’s about meaning, context, and understanding.

But as you navigate this new frontier, questions are sure to arise:

- What is RAG, and how does the magic happen? Imagine a powerful search engine that draws its answers from your content, not the vast, generic internet. That’s RAG’s secret sauce.

- Does this mean the death of keywords? Not quite. Think of RAG as your new AI-powered pilot for search applications, but keyword search is still the co-pilot.

- What’s the payoff? Boosted efficiency, better customer experiences, and insights you never knew existed. RAG unlocks the hidden potential within your data, fueling informed decisions and driving business growth.

- Challenges? We’ve seen them all. At Pureinsights, with 25 years and hundreds of successful search implementations under our belt, we’ve tackled every hurdle in the book. We’re with you every step of the way, ensuring that you implement RAG only once – the right way.

Ready to unleash the power of AI-powered search? Read on to learn more. Then contact us and we’ll help you tailor RAG to your specific needs, unlocking a new era of search efficiency and business success.

What is RAG?

RAG is a novel approach to natural language processing (NLP) that combines the power of large language models (LLMs) with the precision of information retrieval systems. The LLMs themselves are pretrained on massive datasets of content, including text and code, and some also handle images and even audio. RAG applications often leverage existing general-purpose LLMs from Google, Microsoft / OpenAI, Amazon or Meta. What sets RAG apart is that, at query time, the model also has access to an external knowledge base (your content), which allows it to generate more accurate and informative responses.

What is vector search and how does it fit in with RAG?

Vector search is a powerful technique for finding similar items in large datasets, especially unstructured data like text, images, and audio. Instead of relying on keywords, it uses mathematical vectors to capture the meaning and context of the data. You can read more in our blog Vector Search vs Keyword Search.
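To make the idea concrete, here is a minimal Python sketch of vector search, assuming each document has already been turned into an embedding vector. The three-dimensional vectors and document names below are hypothetical toy values, not output from a real embedding model (real embeddings typically have hundreds or thousands of dimensions), and results are ranked by cosine similarity, one common choice of similarity measure.

```python
import math

# Hypothetical toy "embeddings". A real system would obtain these from an
# embedding model; the values here are purely illustrative.
DOCUMENTS = {
    "trail running shoes": [0.9, 0.1, 0.0],
    "road running sneakers": [0.8, 0.2, 0.1],
    "leather office shoes": [0.1, 0.9, 0.2],
    "waterproof hiking boots": [0.4, 0.3, 0.8],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def vector_search(query_vector: list[float], k: int = 2) -> list[tuple[str, float]]:
    """Return the k documents whose vectors are most similar to the query."""
    scored = [
        (doc_id, cosine_similarity(query_vector, vec))
        for doc_id, vec in DOCUMENTS.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# A query vector pointing roughly the same way as the running-shoe documents.
print(vector_search([0.85, 0.15, 0.05]))
```

The two running-shoe documents come back first because their vectors point in nearly the same direction as the query, even though no keywords were compared. Production systems replace this linear scan with an approximate nearest-neighbor index.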

RAG is essentially a use case of vector search. Vector search plays a crucial role in the RAG architecture: it acts as the information retrieval engine that supplies the LLM with the relevant content it uses to generate responses.
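The retrieve-then-generate flow can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Pureinsights’ implementation: chunk embeddings are toy precomputed vectors, the URLs are hypothetical placeholders, and the final LLM call is left out because it depends on your provider. The sketch covers the steps before generation: vector search selects the most relevant chunks, and the prompt restricts the model to those numbered sources, which is also what makes citing references possible.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str          # URL or document ID, kept so answers can cite it
    text: str
    vector: list[float]  # precomputed embedding (hypothetical toy values)


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vector: list[float], index: list[Chunk], k: int = 2) -> list[Chunk]:
    """Retrieval step: vector search for the k chunks closest to the query."""
    return sorted(index, key=lambda c: cosine(query_vector, c.vector), reverse=True)[:k]


def build_prompt(question: str, chunks: list[Chunk]) -> str:
    """Augmentation step: ground the LLM in the retrieved chunks only."""
    context = "\n".join(
        f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the numbered sources below. "
        "Cite sources by number. If the answer is not in the sources, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


# Hypothetical index; the URLs are placeholders.
INDEX = [
    Chunk("https://example.com/about-us", "The About Us page lists the company's executives.", [0.9, 0.1]),
    Chunk("https://example.com/blog/vector-search", "Vector search compares embeddings.", [0.1, 0.9]),
    Chunk("https://example.com/careers", "Open engineering roles are listed here.", [0.6, 0.4]),
]

top_chunks = retrieve([1.0, 0.0], INDEX, k=2)
prompt = build_prompt("How many executives are there?", top_chunks)
print(prompt)
```

The resulting prompt string would then be sent to your LLM of choice. Keeping the source URL on each chunk is what lets the application show references next to the generated answer.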

You can read more about the RAG architecture and the role of vector search in our detailed blog explaining Retrieval Augmented Generation.

Why implement RAG?

In the simplest terms, RAG is an architecture that applies AI technology to let users search and get answers to questions based on your content. It also reduces the risk of “hallucinations,” in which generative AI produces undesired or incorrect answers to submitted queries.

Whether you are operating search on an e-commerce website, support portal, or specialized knowledge application, this control is critical to delivering the right experience to your users. At the same time, RAG lets you create a new conversational search paradigm deployable to search bars, chatbots, support assistants, and mobile applications.

Will RAG replace Keyword Search?

The short answer is no. While some applications may be suitable for a pure RAG implementation, many users will still want a hybrid search solution that leverages both AI and traditional keyword search. Users may want the ability to ask complex questions like “what are the best-selling running shoes?” but they will also want to type in traditional keyword search terms like “black Nike running shoes.”

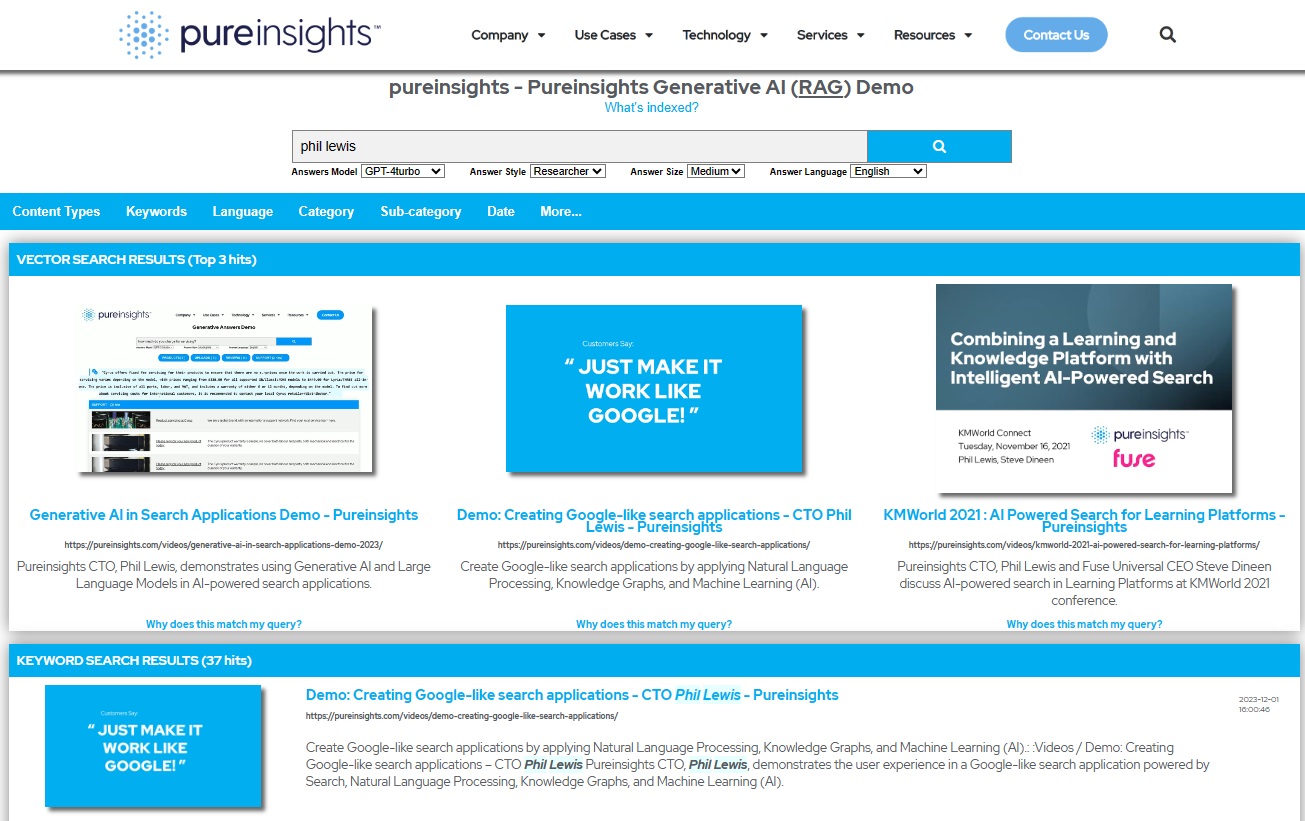
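One common way to combine keyword and vector results into a single hybrid ranking is Reciprocal Rank Fusion (RRF). The sketch below illustrates that general technique, not necessarily how any particular product merges results; the product IDs are hypothetical, and k=60 is the constant conventionally used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    with rank starting at 1, and documents are sorted by total score.
    """
    scores: dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical results for the query "black Nike running shoes".
keyword_results = ["black-nike-runner", "nike-hoodie", "black-adidas-runner"]
vector_results = ["bestseller-runner", "black-nike-runner", "black-adidas-runner"]

fused = reciprocal_rank_fusion([keyword_results, vector_results])
print(fused)
```

Because RRF uses only rank positions, it sidesteps the problem that keyword relevance scores (such as BM25) and cosine similarities live on different scales. Here `black-nike-runner` ranks first because both lists placed it near the top.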

Hybrid Search example with RAG

Let’s see an example implementation of Hybrid Search and RAG on our demo platform to search the Pureinsights website. In this example we simply type in the phrase “Phil Lewis” (our CTO). The application determines that this is a keyword search and serves up a list of results with the best matches using vector search and keyword search. These results reflect blogs and content authored by Phil.

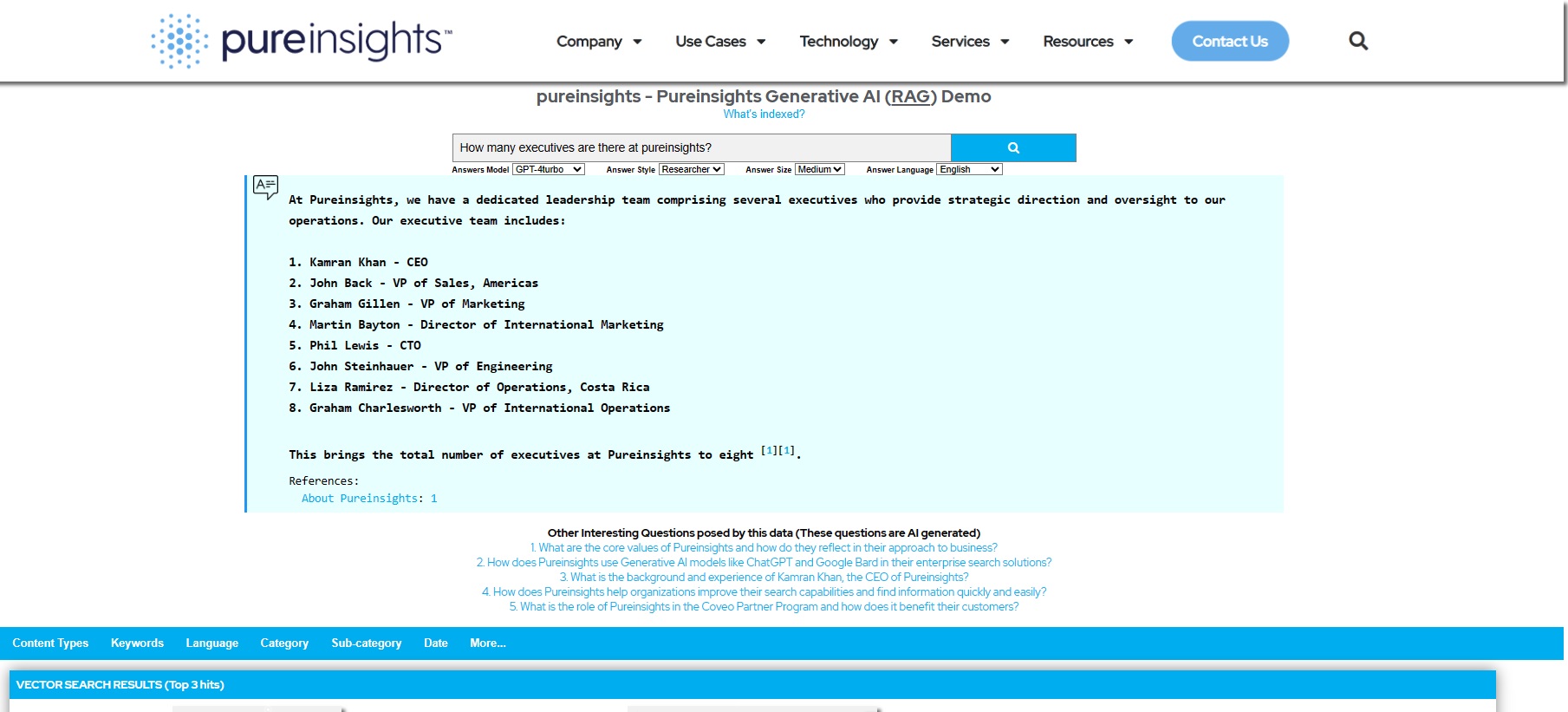
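The demo decides whether a query is a keyword search or a question. The source doesn’t describe how, so the following is only a hypothetical heuristic: treat a query as a question if it ends with a question mark or starts with a question word. In both cases a hybrid results list can still be shown; the routing only decides whether to also ask the LLM for a generated answer. Production systems might use a trained classifier or the LLM itself instead.

```python
# Hypothetical set of words that usually open a natural-language question.
QUESTION_WORDS = {
    "who", "what", "when", "where", "why", "how", "which",
    "can", "does", "do", "is", "are", "should",
}


def route_query(query: str) -> str:
    """Classify a query as "question" (also generate an answer) or "keyword".

    A simple heuristic for illustration only.
    """
    cleaned = query.strip()
    words = cleaned.lower().split()
    if not words:
        return "keyword"
    if cleaned.endswith("?") or words[0] in QUESTION_WORDS:
        return "question"
    return "keyword"


for q in ["Phil Lewis", "how many executives are there at Pureinsights"]:
    print(q, "->", route_query(q))
```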

Now let’s try a fully phrased question like “how many executives are there at Pureinsights?” The image below shows an answer to the question.

What’s important to note is that this is a truly AI-generated answer (using the GPT-4 Turbo LLM). While there is an “About Us” page on the Pureinsights website that lists the executives, the actual count doesn’t appear anywhere on the website. The AI models interpreted the query and created the answer: “the total number of executives at Pureinsights is eight.” This distinguishes the solution from an extractive-answer or “featured snippets” approach, where the exact answer must already exist somewhere in the crawled content.

The solution also lists a reference for the answer, which provides transparency, along with a list of vector and keyword search results, as you would expect from a hybrid search implementation. What’s not so obvious is how transformative this solution really is. By leveraging an LLM in a RAG architecture, we are able to provide answers in multiple languages (even though the original content is in English) and at different levels of expertise. Imagine an auto parts website where you can ask a complex question and get a different answer depending on whether you are a novice consumer or an expert mechanic.
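Serving different languages and levels of expertise from the same English content largely comes down to how the LLM is instructed. The sketch below assumes a hypothetical pair of audience profiles and simply builds the instruction text that would accompany the retrieved sources; the retrieved content itself stays the same.

```python
# Hypothetical audience profiles; real wording would be tuned per application.
AUDIENCE_STYLES = {
    "novice": "Explain in plain language and avoid jargon.",
    "expert": "Be concise and use precise technical terminology.",
}


def build_instructions(audience: str, language: str) -> str:
    """Instructions sent to the LLM alongside the retrieved (English) sources.

    Unknown audiences fall back to the novice style.
    """
    style = AUDIENCE_STYLES.get(audience, AUDIENCE_STYLES["novice"])
    return (
        "Answer only from the provided sources and cite them. "
        f"{style} Write the answer in {language}."
    )


print(build_instructions("expert", "Spanish"))
```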

Watch a short demo on search and AI by our CTO, Phil Lewis, here: Generative AI in Search Applications: Demo – Pureinsights

Benefits of implementing RAG and Hybrid Search

Besides the obvious benefit of generating answers for your users based only on your content, RAG and Hybrid Search let your applications deliver:

- More accurate and relevant results: Less time wading through irrelevant links, and less risk of “hallucinations.” RAG and Hybrid Search zero in on what users need, understanding the context and nuances of their queries.

- Personalized insights: Get results tailored to specific user needs and interests. By incorporating query history, RAG can take your users’ background knowledge and preferences into account to deliver tailored information.

- Enhanced productivity: Unleash the power of generative models. RAG can use retrieved information to create original content summaries, or even answers to detailed questions, all in a natural and engaging way.

- Improved data integration: Seamlessly incorporate private or domain-specific data into your search. RAG can access and leverage additional sources not accessible to traditional search engines.

- Greater transparency and auditability: Understand the source of your information. RAG allows you to trace the retrieved documents used to generate your results, fostering trust and confidence.

Challenges and pitfalls to avoid with Retrieval Augmented Generation

While there are exciting benefits to realize by implementing RAG and hybrid search, there are challenges to consider.

- Content processing is still important: While it may seem like AI makes dictionaries, ontologies, and metadata less important, the reality is that ignoring them can lead to bad search results and incorrect answers. Introducing the wrong content can also exacerbate rather than fix the “hallucination” problem.

- LLMs can impact performance and cost: As a third-party component introduced into your architecture, LLMs can introduce unpredictable performance issues and escalate operational costs for your search application.

- You still have to understand search: Implementing hybrid search with RAG still requires specialized search expertise, such as managing search indices, creating searchable vector databases, and developing UIs that offer faceting and other features beyond a simple chat-like interface.

You can read even more details in our blog 5 Common Challenges Implementing Retrieval Augmented Generation (RAG).

You can implement RAG many times through trial and error. Or once with Pureinsights.

Building cutting-edge search solutions with AI can seem deceptively easy in the early stages. Remember those “plug-and-play” promises of early search vendors? While open-source options like Solr and Elasticsearch offer flexibility, navigating the complexities of AI search to achieve enterprise-grade scalability, performance, and security remains a significant hurdle.

At Pureinsights, we bridge the gap between AI promise and production reality. We’ve helped leading brands like Mintel unlock the power of Retrieval Augmented Generation (RAG) to revolutionize their search platforms. Now, we’re bringing this expertise to information portals, manufacturers, and retailers, helping them adapt and thrive in the age of AI-powered search.

Our combination of deep consulting, complementary technology, and managed services ensures a smooth journey from vision to impactful results. Contact us for a personalized consultation and discover how AI search can elevate your business.

Additional Resources

- Free E-Book: Unleashing AI-Powered Search – Pureinsights

- What is Retrieval Augmented Generation (RAG)? – Pureinsights

- 5 Common Challenges Implementing Retrieval Augmented Generation (RAG) – Pureinsights

- Comparing Vector Search Solutions 2023 – Pureinsights

- Vector Search vs Keyword Search – Pureinsights

- What are Large Language Models? Search and AI Perspectives – Pureinsights

- Mintel – RAG Case Study – Pureinsights